Cloud computing for AI is no longer just a technical option. Behind today’s generative AI sits a hard infrastructure reality: progress is driven not only by algorithms, but also by massive, measurable compute.

Because of that, the cloud acts as the gateway to AI: it is a practical path to building AI at scale. This article explains the “why” in simple terms.

Why Cloud Computing for AI Becomes the Default

Cloud is not just a storage vault. It has evolved into the core layer that makes large-scale AI possible.

There are three key reasons:

- on-prem limits for modern AI,

- better cost control,

- flexible scaling.

On-Prem Barriers for Modern AI

Training AI can be extreme

Modern AI workloads can be too heavy for classic on-prem setups. Training a large language model may require thousands of GPUs running in parallel for weeks.

As a result, building a private data center can be financially risky. Hardware, cooling, and maintenance costs create a high barrier to entry. For most teams, cloud computing for AI is therefore a more realistic starting point.

A note of caution: not every AI project needs LLM-level training. Map your use case first, then size your infrastructure to match it.
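To make the sizing step concrete, here is a minimal back-of-the-envelope sketch in Python. It only multiplies GPUs by running time to get GPU-hours. The function name `gpu_hours` and both workloads are hypothetical placeholders chosen for illustration, not measurements from any real project.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours used by a job that keeps `num_gpus` busy for `days` days."""
    return num_gpus * days * 24


# Hypothetical workloads; the figures are placeholders, not benchmarks.
llm_training = gpu_hours(num_gpus=2000, days=21)  # "thousands of GPUs for weeks"
fine_tuning = gpu_hours(num_gpus=8, days=2)       # a much smaller project

print(f"LLM-scale training: {llm_training:,.0f} GPU-hours")
print(f"Small fine-tune:    {fine_tuning:,.0f} GPU-hours")
print(f"Ratio:              {llm_training / fine_tuning:,.0f}x")
```

Even with rough inputs, the gap between the two cases spans orders of magnitude, which is why the use case should drive the infrastructure decision.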

Cloud as the AI Gateway: “Supercomputer Access” on Demand

Rent what you need, then turn it off

In this model, infrastructure is not an asset you must buy. Instead, it is a utility you can rent. You can “spin up” compute during training, then shut it down when done.

This is how the cloud, acting as the AI gateway, removes the logistics barrier. It turns a hard infrastructure build into an operational choice.

Cloud Computing for AI Improves Cost Flexibility

From CapEx to OpEx

Cloud adoption shifts spending from heavy upfront CapEx to more flexible OpEx. This eases cash flow, because you pay as you use capacity. It also helps speed, because there is no hardware procurement cycle to wait for.

Hardware becomes obsolete fast

AI hardware can become obsolete in a short time, often in under three years.

With cloud, the depreciation risk sits more with the provider, not the AI team. So, you can move to newer processors sooner.
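The trade-off between owning and renting can be sketched with simple arithmetic. The Python example below is an illustration under stated assumptions, not a pricing model. The purchase price, upkeep, lifetime, and hourly rental rate are all hypothetical numbers, and the function names are invented for this sketch.

```python
HOURS_PER_YEAR = 24 * 365


def owned_cost_per_used_hour(purchase_price, lifetime_years, yearly_upkeep, utilization):
    """Effective cost of one *used* GPU-hour on owned hardware.

    `utilization` is the fraction of hours the GPU is actually busy (0 < u <= 1).
    Idle hours still cost money, so low utilization raises the effective price.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_cost = purchase_price + yearly_upkeep * lifetime_years
    used_hours = HOURS_PER_YEAR * lifetime_years * utilization
    return total_cost / used_hours


def break_even_utilization(purchase_price, lifetime_years, yearly_upkeep, rental_rate):
    """Utilization above which owning becomes cheaper than renting per hour.

    A result above 1.0 means renting is cheaper even at full utilization.
    """
    total_cost = purchase_price + yearly_upkeep * lifetime_years
    return total_cost / (rental_rate * HOURS_PER_YEAR * lifetime_years)


# Hypothetical inputs: one GPU server share, 3-year useful life.
price, years, upkeep, rent = 30_000, 3, 5_000, 3.00

threshold = break_even_utilization(price, years, upkeep, rent)
print(f"Owning pays off above {threshold:.0%} utilization")
print(f"Owned cost at 20% utilization: "
      f"{owned_cost_per_used_hour(price, years, upkeep, 0.20):.2f} per used hour")
```

The shorter the useful lifetime, the higher the break-even utilization climbs, which is the obsolescence risk described above expressed as a number.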

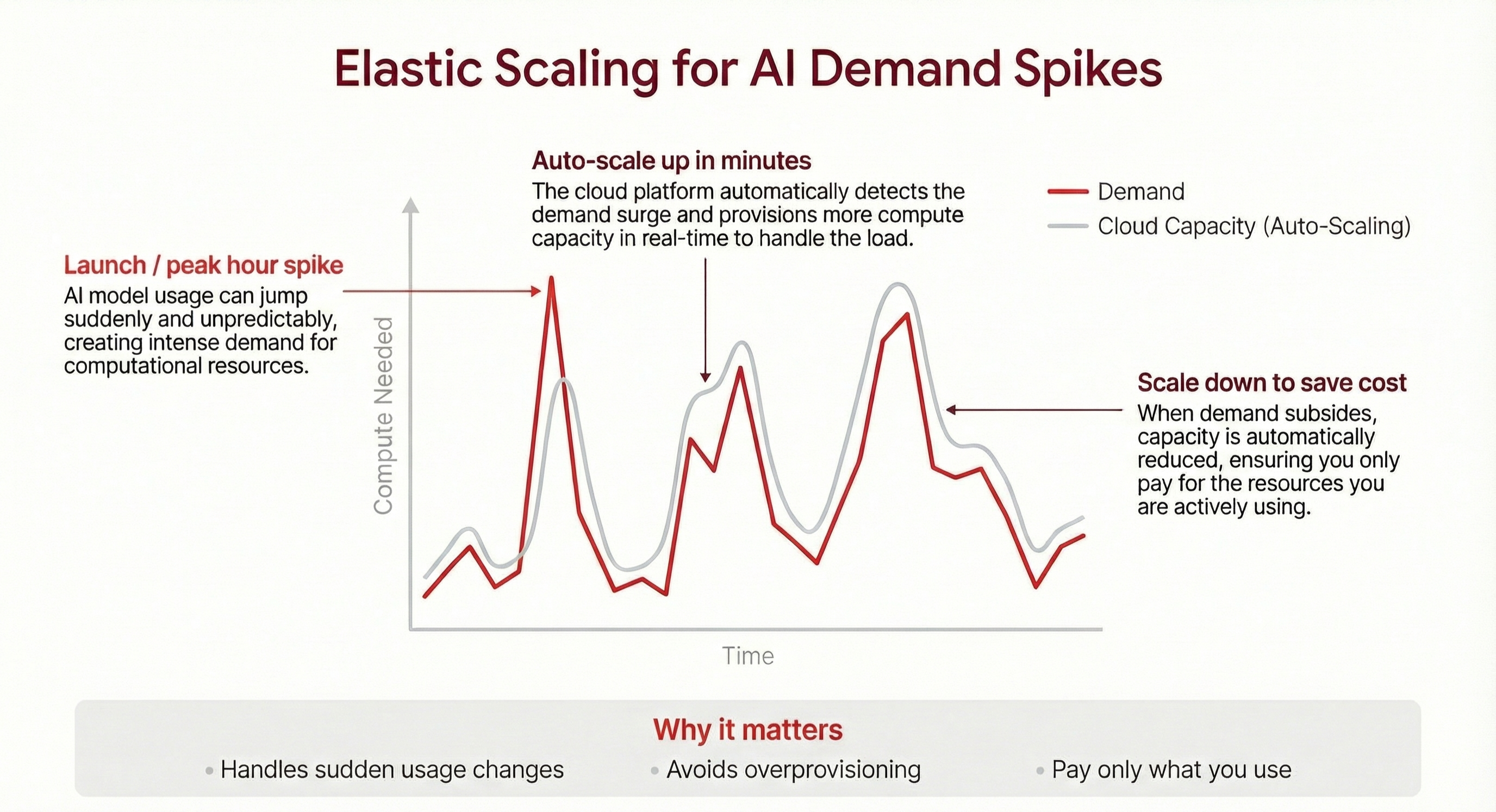

Elastic Scaling for AI Demand Spikes

Auto-scaling helps when traffic changes

AI demand is often unstable. At launch or during peak hours, compute needs can jump fast. Cloud enables auto-scaling, so capacity grows and shrinks with demand instead of staying fixed at whatever was purchased. Because of that, cloud computing for AI fits modern product cycles.
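The idea behind auto-scaling can be shown with a simplified rule: divide the current load by the load one replica can handle, round up, and keep the result within fixed bounds. The Python sketch below is a toy model of that rule, not any cloud provider's actual API. The function name, the traffic trace, and the per-replica target are all assumptions made for illustration.

```python
import math


def desired_replicas(total_rps, target_rps_per_replica, min_replicas=1, max_replicas=20):
    """Replica count needed so that each replica serves about the target load.

    The result is clamped to [min_replicas, max_replicas] so the service never
    scales to zero and never grows past a budget limit.
    """
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    needed = math.ceil(total_rps / target_rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical traffic trace in requests per second: quiet, launch spike, cool-down.
trace = [50, 400, 1500, 300, 20]

for rps in trace:
    replicas = desired_replicas(rps, target_rps_per_replica=100, max_replicas=10)
    print(f"{rps:>5} req/s -> {replicas} replica(s)")
```

Real auto-scalers add smoothing and cool-down periods to avoid reacting to every short blip, but the core proportional rule is the same: capacity follows demand in both directions.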

Conclusion

The point is clear: the future of AI is not in the office server room. It is in the cloud. So, the key question is not “Do we need cloud?” It is “How fast can we migrate AI workloads?”

If your team runs AI, analytics, or heavy business apps, Indonesian Cloud can help you assess the right setup, plan scaling, and keep costs predictable. Contact Indonesian Cloud for a quick assessment and a best-fit cloud recommendation.